AI Interpretability and Trustworthiness

Insight 02 - 3-minute read

The field holds many differing stances on how to define trust in AI models.

As artificial intelligence becomes increasingly prevalent in systems that once relied on human judgment, it is important to examine how it reaches decisions and how it performs along the way. Accuracy is a critical measure of AI capability because it serves as a benchmark for future use, whether it is measured against human judgment or against prior trials. However, accuracy alone is insufficient when models operate as opaque mechanisms that produce outcomes without intelligible reasoning. For example, in medical diagnosis systems, a model that predicts disease with high accuracy but cannot explain which clinical features influenced its decision limits a clinician’s ability to validate, trust, and safely act on its output. Interpretability is therefore not a supplementary feature but a prerequisite for responsible deployment.

The Black Box Problem

Black-box models are those whose internal decision-making processes are obscured from, or incomprehensible to, humans. Many are deep, complex neural networks that achieve high accuracy. However, they lack the component described above: reasoning that a human can inspect and validate.

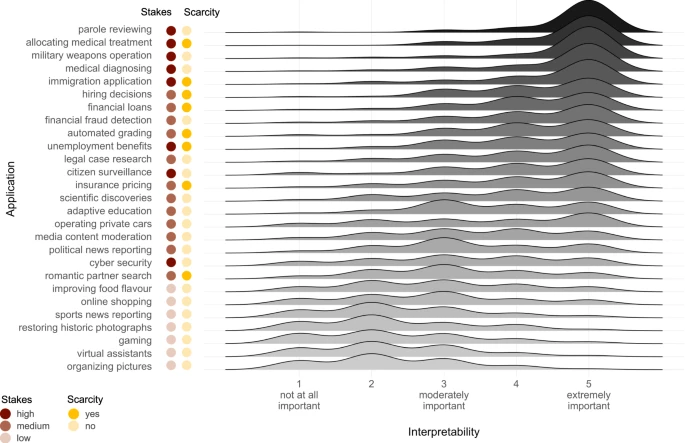

The figure above highlights the importance of transparency in artificial agents.

Source: Nussberger et al., Nature Communications (2022).

Analyzing these data raises a prominent question:

How can reliable behavior be differentiated from coincidental correlation?

Transparency as a Form of Epistemology

Transparency and trust are key to opening up black-box systems. They give machine learning practitioners a deeper understanding of how an AI system reaches its decisions. Methods that illuminate a model’s internal structure are often applied as post-hoc explanations after training, and they are essential for future development.

Without causal insight, errors remain unexplained, biases undetected, and failures unpredictable. Interpretability seeks to recover this missing structure by revealing how inputs influence outcomes, restoring a degree of epistemic accountability to algorithmic decision-making.
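One widely used post-hoc method is permutation feature importance. It shuffles one input column, re-scores the model, and treats the resulting drop in accuracy as a measure of how much the model relies on that input. The Python sketch below is a minimal illustration under stated assumptions. The article does not prescribe this method. The `black_box` function, the toy dataset, and the feature names (`lab_marker`, `age`, `record_id`) are hypothetical stand-ins. The scores describe what the model depends on, not causal effects in the real world.

```python
import random
from typing import Callable, List, Sequence

# A "model" here is any function from a feature row to a class label.
Model = Callable[[Sequence[float]], int]


def accuracy(model: Model, X: List[List[float]], y: List[int]) -> float:
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: List[List[float]], y: List[int],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Mean drop in accuracy when each feature column is shuffled.

    Shuffling breaks the link between one feature and the labels while
    keeping that feature's distribution; a large drop means the model
    relies on that feature.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing only feature j with its shuffled value.
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical toy data: three features, label determined by the first only.
FEATURES = ["lab_marker", "age", "record_id"]
data_rng = random.Random(42)
X = [[data_rng.random() for _ in FEATURES] for _ in range(200)]
y = [int(row[0] > 0.5) for row in X]


def black_box(row: Sequence[float]) -> int:
    """Hypothetical stand-in for an opaque model: callers see only inputs and outputs."""
    return int(row[0] > 0.5)


scores = permutation_importance(black_box, X, y)
for name, score in zip(FEATURES, scores):
    print(f"{name:>10}: {score:.3f}")
```

Run as-is, `age` and `record_id` score exactly 0.000 because the toy model never reads them, while `lab_marker` receives a clearly positive score. The sketch shuffles a column rather than deleting it, which keeps that feature's distribution intact so the model only ever sees realistic values. In practice, a high-accuracy model that leaned heavily on something like `record_id` would be a warning sign that it had learned a coincidental correlation rather than reliable behavior.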

Interpretability as a Catalyst for Modern AI Agents

Interpretability is not a solution on its own, but it is a critical component in understanding a machine’s inner workings and a necessary condition for trust.

A system that cannot justify its decisions cannot be fully trusted, regardless of its empirical performance.

The framework of trust within machine learning is an ever-expanding field. When machines are embedded in domains where errors carry irreversible consequences, interpretability becomes the primary means of separating reliable accuracy from coincidental correlation.